E2F-GAN: Eyes-to-Face Inpainting via Edge-Aware Coarse-to-Fine GANs

Authors: Ahmad Hassanpour, Amir Etefaghi Daryani, Mahdieh Mirmahdi, Kiran Raja, Bian Yang, Christoph Busch, Julian Fierrez

IEEE Access, 2022

Abstract

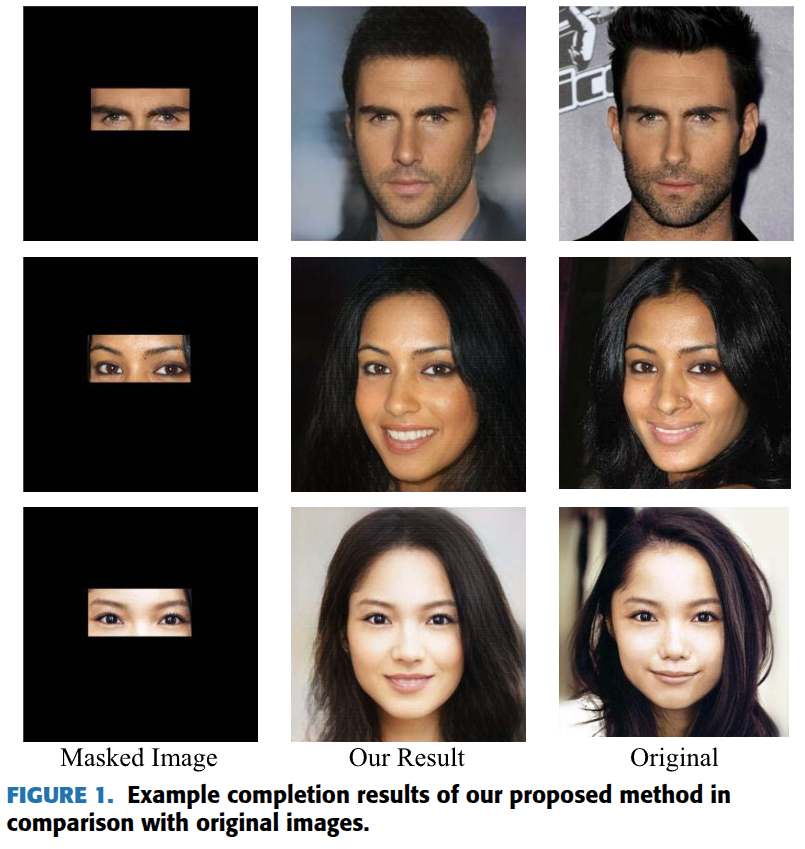

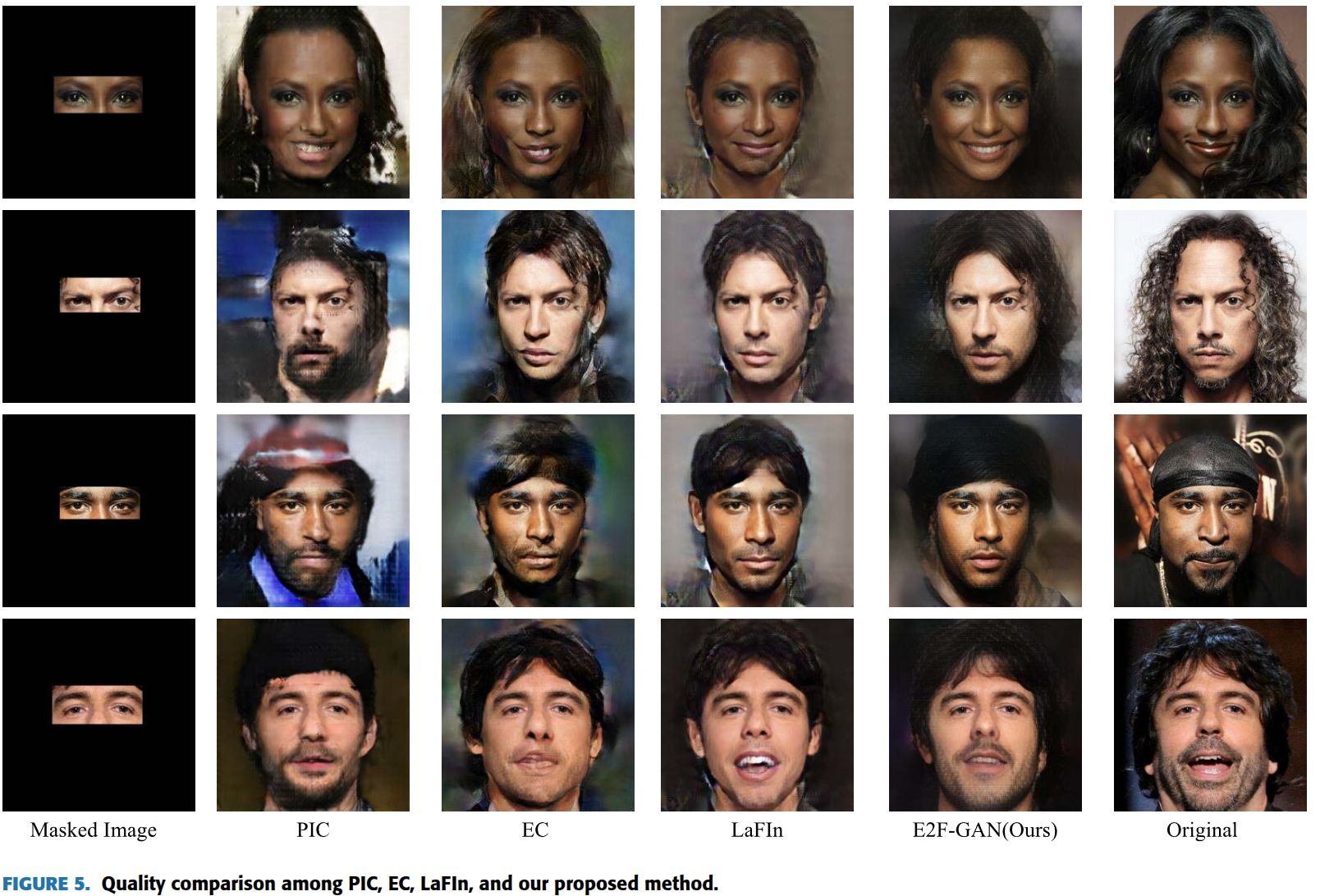

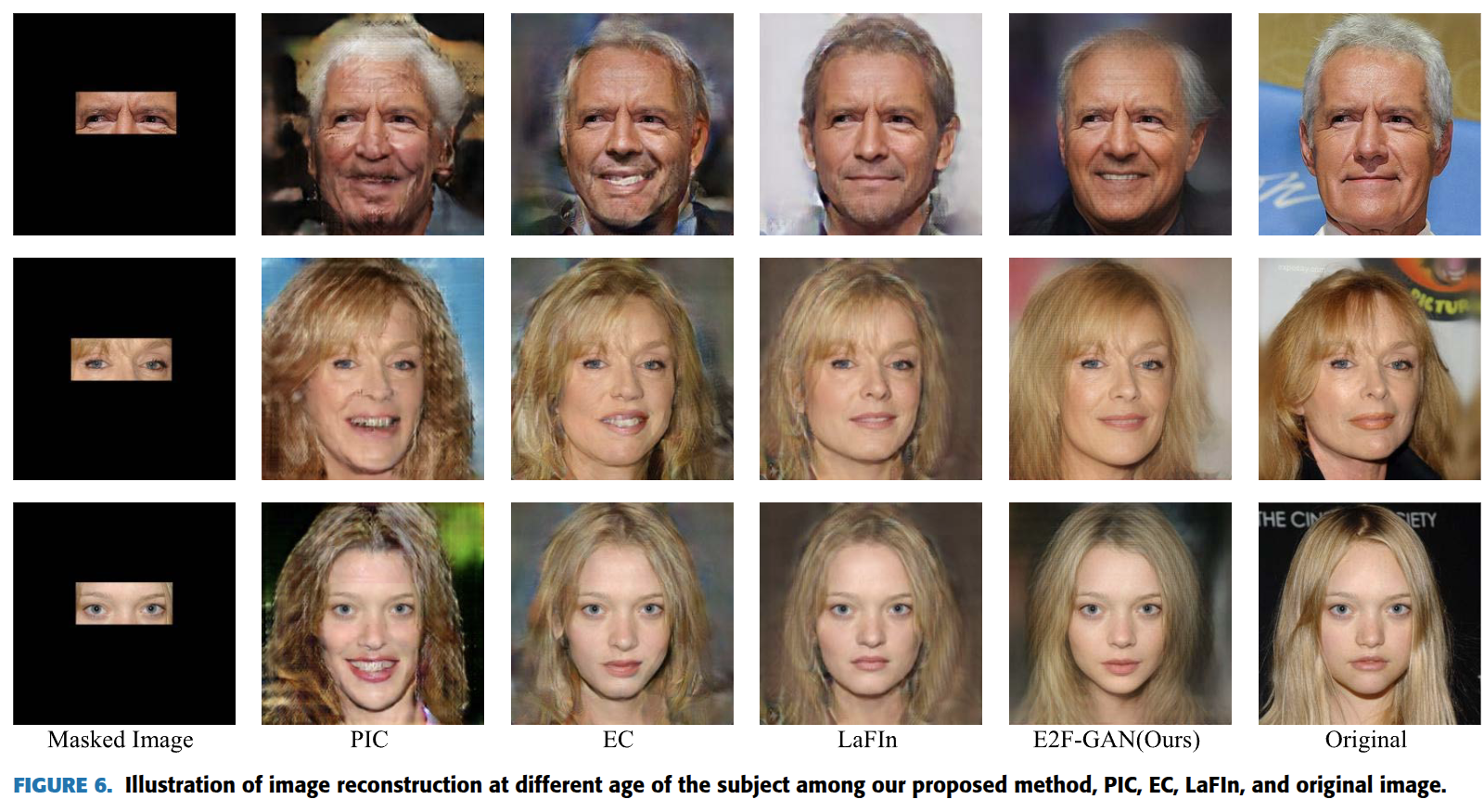

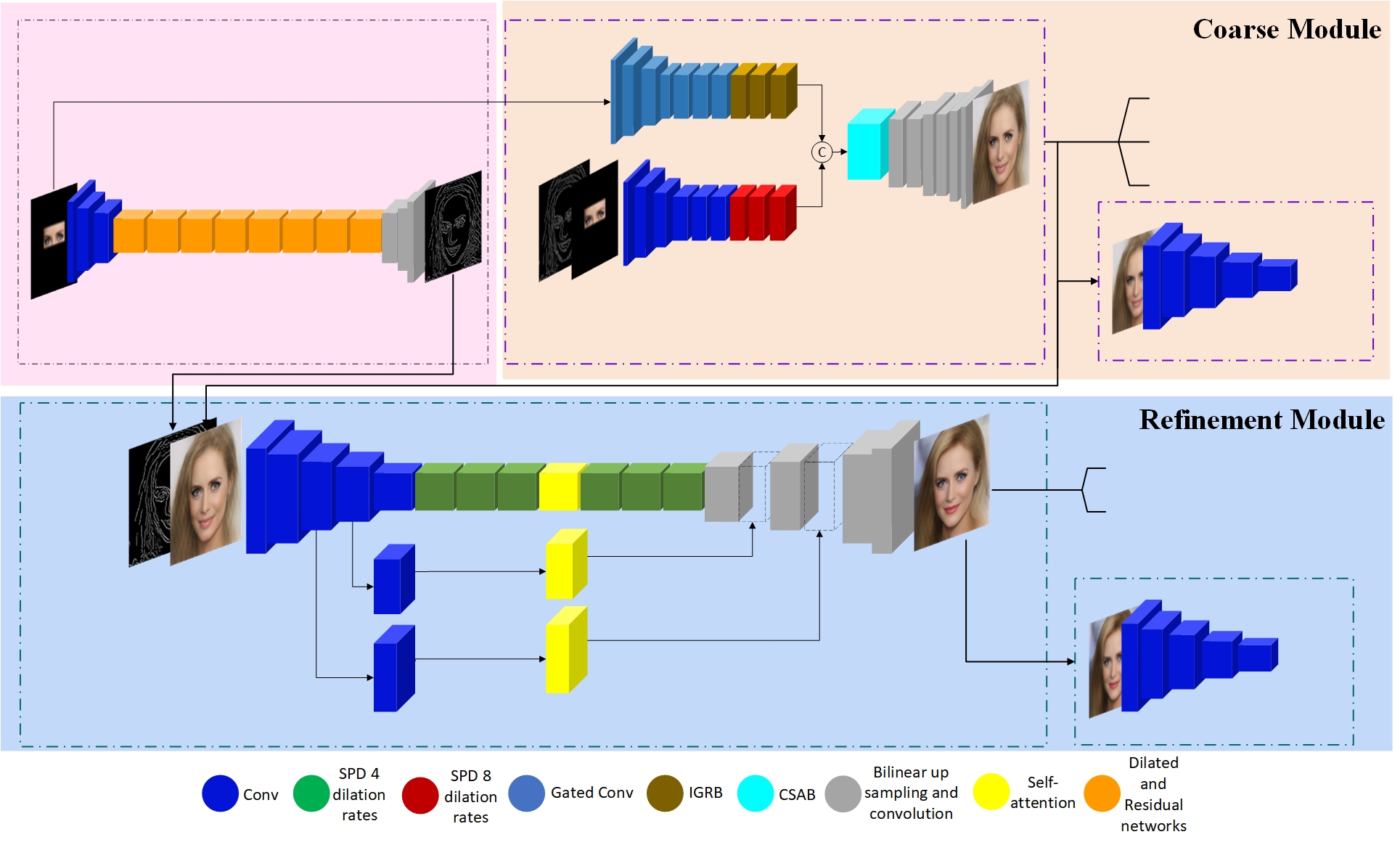

Face inpainting is a challenging task that aims to fill damaged or masked regions of face images with plausibly synthesized content. Given the available information, the reconstructed regions should look realistic and, more importantly, preserve the demographic and biometric properties of the individual. The aim of this paper is to reconstruct the full face from the periocular region (eyes-to-face). To do this, we propose a novel GAN-based deep learning model called Eyes-to-Face GAN (E2F-GAN), which includes two main modules: a coarse module and a refinement module. The coarse module, together with an edge predictor module, extracts the required features from the periocular region and generates a coarse output, which is then refined by the refinement module. Additionally, a dataset for eyes-to-face synthesis has been generated from the public face dataset CelebA-HQ for training and testing, and we perform both qualitative and quantitative evaluations on it. Experimental results demonstrate that our method outperforms previous learning-based face inpainting methods and generates realistic and semantically plausible images.
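The coarse-to-fine data flow described in the abstract can be illustrated with a toy sketch. This is not the E2F-GAN implementation: the function names (`periocular_mask`, `coarse_stage`, `refine_stage`, `inpaint`) are hypothetical, the two stages are simple arithmetic stand-ins for the learned generators, and the edge predictor is omitted. It only shows the order of operations under those assumptions: hide everything except the eye band, produce a rough fill, then refine the unknown pixels while leaving the known ones untouched.

```python
from typing import List

Image = List[List[float]]  # grayscale image, values in [0, 1]
Mask = List[List[int]]     # 1 = known (periocular) pixel, 0 = pixel to synthesize


def periocular_mask(height: int, width: int, top: int, bottom: int) -> Mask:
    """Hypothetical helper: mark rows top..bottom-1 (the eye band) as known."""
    return [[1 if top <= r < bottom else 0 for _ in range(width)]
            for r in range(height)]


def apply_mask(image: Image, mask: Mask) -> Image:
    """Zero out every pixel outside the known region to form the input."""
    return [[p * m for p, m in zip(img_row, m_row)]
            for img_row, m_row in zip(image, mask)]


def coarse_stage(masked: Image, mask: Mask) -> Image:
    """Stand-in for a coarse generator: fill unknown pixels with the mean
    of the known pixels. Assumes the mask marks at least one known pixel."""
    known = [p for img_row, m_row in zip(masked, mask)
             for p, m in zip(img_row, m_row) if m]
    fill = sum(known) / len(known)
    return [[p if m else fill for p, m in zip(img_row, m_row)]
            for img_row, m_row in zip(masked, mask)]


def refine_stage(coarse: Image, mask: Mask) -> Image:
    """Stand-in for a refinement generator: average each unknown pixel with
    its vertical neighbours in the coarse output; known pixels are kept."""
    height = len(coarse)
    out = [row[:] for row in coarse]
    for r in range(height):
        for c in range(len(coarse[r])):
            if not mask[r][c]:
                neighbours = [coarse[rr][c] for rr in (r - 1, r, r + 1)
                              if 0 <= rr < height]
                out[r][c] = sum(neighbours) / len(neighbours)
    return out


def inpaint(image: Image, mask: Mask) -> Image:
    """Run the two stand-in stages in sequence: mask, coarse fill, refine."""
    masked = apply_mask(image, mask)
    coarse = coarse_stage(masked, mask)
    return refine_stage(coarse, mask)


if __name__ == "__main__":
    face = [[0.2, 0.2], [0.4, 0.6], [0.8, 0.8], [1.0, 1.0]]
    eyes_only = periocular_mask(4, 2, top=1, bottom=2)
    for row in inpaint(face, eyes_only):
        print(row)
```

In a real model each stand-in would be replaced by a trained network, and the refinement stage would typically see both the coarse output and any predicted edge map; the sketch keeps only the sequencing and the rule that known pixels pass through unchanged.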

Output Images

Bibtex

@ARTICLE{9737139,

author={Hassanpour, Ahmad and Daryani, Amir Etefaghi and Mirmahdi, Mahdieh and Raja, Kiran and Yang, Bian and Busch, Christoph and Fierrez, Julian},

journal={IEEE Access},

title={E2F-GAN: Eyes-to-Face Inpainting via Edge-Aware Coarse-to-Fine GANs},

year={2022},

volume={10},

number={},

pages={32406-32417},

keywords={Face recognition;Faces;Feature extraction;Generators;Task analysis;Image reconstruction;Shape;Face inpainting;generative adversarial networks;image inpainting},

doi={10.1109/ACCESS.2022.3160174}}